Hello all,

It's week 5, which means that it's that time of the quarter again! Miraculously, though, somewhere between not getting any sleep, neglecting the sun, and drowning ourselves in textbooks and notes, we have managed to make some amazing progress on our imminent usability tests.

Usability testing has been our primary focus for most of this quarter, and it's great to see things really coming together. Here is a summary of our recent work in preparation for these tests:

- Creating several test apps for our human subject trials.

- Becoming certified and knowledgeable in the area of conducting research involving human subjects.

- Gathering information on how to conduct a productive and proper usability test.

- Creating a survey for each participant to complete before and after their usability test.

- Creating a rough script to be used during each test.

- Defining and fleshing out the descriptions of team roles.

- Researching the specifics of conducting usability tests with participants who are visually impaired.

Our test apps deserve more in-depth coverage; a bullet can only show so much, after all! We are very proud of the work we've accomplished so far this quarter; I'm also extremely vainglorious about my modesty and vocabulary.

The center of attention has been our test apps. We currently have two, and they differ only in how the user zooms in and out of the content on the screen: one uses the standard iPad "pinch" gesture, and the other uses a less conventional "tap-to-zoom" method. Each app includes a preliminary draft of our UI, with the option to switch between the inverted and non-inverted icon sets. In addition, the "notes" icon pops up a text box in which the user can take notes in real time, using either the on-screen keyboard or an external USB keyboard.

The design of our test apps will let us create a test environment that yields a substantial amount of information on the key visual and usability components of our app; it will also help us get a feel for how users are likely to respond to certain key features. Having this information so early in the project's life cycle will be very valuable.

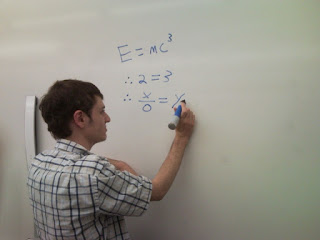

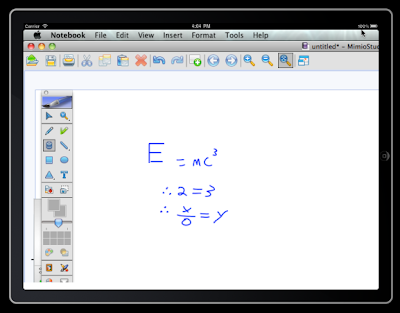

An image of the current version of our test app can be seen to the left, with the inverted icon mode in effect. The large image in the center is custom-made to mimic what the user might see if our device were capturing notes during a real lecture. To make it as authentic as possible, it combines digital images with handwritten notes; it is also (purposefully) too large for the iPad screen, so it must be scrolled around using one finger. This will allow us to test how easy the app is to navigate in a real-world setting.

The toolbar at the top provides quick access to the home screen and the settings page, while the bottom toolbar contains all of the tools immediately available in the board view. These tools are (from left to right) "Save Lecture", "Take Notes", "Selection Tool", "Inversion Toggle", "Zoom-Out", and "Zoom-In". As mentioned above, the "Take Notes" icon is functional.

I hope that this 'tour' of our usability test apps has been fun for you, or at least more exciting than what you were doing before!

Once the test survey and script are more fleshed out, and once we have done more research into usability testing with visually impaired (VI) participants, we will post a nice, content-filled update.

Until next week...

- Alex